
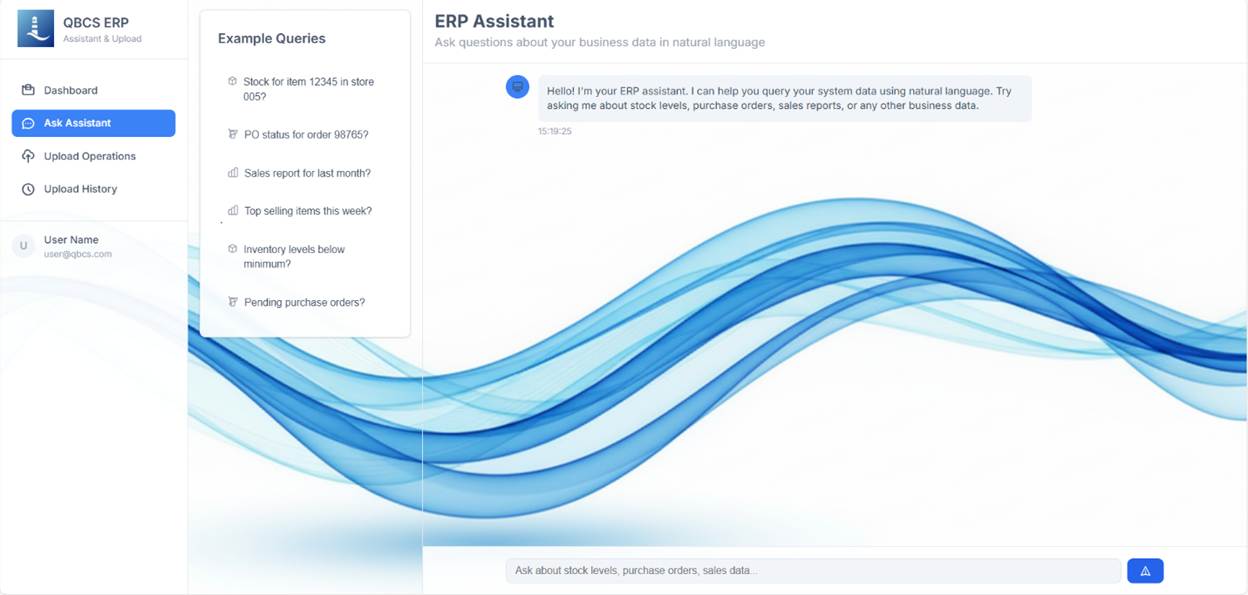

Why Every Retailer Should Have Its Own Self-Hosted LLM

As AI adoption accelerates across retail, one thing is becoming clear: generic, cloud-based AI tools aren’t always the best fit for every business need. For retailers looking to gain more control, speed, and security, self-hosted Large Language Models (LLMs) are emerging as a powerful alternative.

While public LLMs like ChatGPT or Claude are incredibly capable, they run on the provider’s infrastructure rather than inside your business, so your prompts leave your network. That’s where self-hosted models come in: lightweight, customizable language models that run entirely within your infrastructure, giving you full ownership of how AI is used across your organization.

First, What Is a Self-Hosted LLM?

A self-hosted LLM is a large language model that you run on infrastructure you control, either on-premises hardware or rented cloud servers. You can choose from openly available models like Llama 3, Mistral, or Falcon, and fine-tune them with your company’s internal data.

Unlike a public API, a self-hosted model never sends your prompts or data to an outside model provider. Everything stays in your environment, under your control.
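To make this concrete, here is a minimal sketch of an internal tool asking a locally hosted model a question over HTTP. It assumes an Ollama server (one of several tools for running open models locally) listening on its default port, localhost:11434, with a model named `mistral` already pulled; the helper names `build_generate_request` and `ask_local_model` are hypothetical. The point is that the request URL is on your own machine, so the prompt never reaches an outside provider.

```python
import json
import urllib.request

# Assumption: an Ollama server is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build (but do not send) a request to the local model's generate endpoint."""
    # stream=False asks the server for a single JSON reply instead of a token stream.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "mistral", timeout: float = 60.0) -> str:
    """Send the prompt to the locally hosted model and return its text answer."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # Ollama returns the generated text in the "response" field.
    return body["response"]
```

Calling `ask_local_model("Summarize our return policy in one sentence.")` from a script on the same machine returns the model's answer as a plain string, without any traffic leaving your environment.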

Why Privacy Is the #1 Reason to Self-Host

Privacy is no longer just a compliance concern—it’s a strategic advantage.

Many public LLM platforms can use your inputs to improve their models, depending on your plan and settings. In fact, as recently reported by PCMag, even OpenAI CEO Sam Altman confirmed that data sent to ChatGPT can be used to improve the model unless you’re on a specific enterprise plan.

With a self-hosted LLM:

- Your customer data, product details, and internal IP never leave your environment

- There’s zero risk of your data being used to train someone else’s model

- You retain full control over retention, storage, and access

In sectors like retail, where customer trust is everything, that kind of control matters.

Ideal for Internal Processes and Daily Microtasks

Let’s be honest: a lightweight self-hosted model won’t outperform the cloud-based giants in general reasoning or creative writing. But that’s not the point.

Self-hosted models are well suited to small, repetitive, internal tasks where speed and security are critical:

- Answering policy and HR questions for staff

- Summarizing daily sales reports or customer feedback

- Generating product descriptions from structured data

- Assisting warehouse or store teams with inventory info

Because the model runs close to the data and systems it works with, responses skip the round trip to an external service, can draw on internal context, and never leave your network.
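As an illustration of the product-description task, the sketch below turns a structured product record into a prompt for the local model. The `Product` fields (`name`, `material`, `color`, `price`, `features`) and the `build_description_prompt` helper are hypothetical; a real catalog would map its own schema. Keeping the template in code makes the wording of the instruction identical across thousands of SKUs.

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    """Hypothetical catalog record; real schemas will differ."""
    sku: str
    name: str
    material: str
    color: str
    price: float
    features: list[str] = field(default_factory=list)


def build_description_prompt(product: Product, max_words: int = 60) -> str:
    """Turn structured product data into an instruction for the model."""
    # One bullet per feature; fall back to a placeholder if none are listed.
    feature_lines = "\n".join(f"- {feat}" for feat in product.features) or "- (none listed)"
    return (
        f"Write a product description of at most {max_words} words.\n"
        "Use only the facts below; do not invent specifications.\n\n"
        f"Name: {product.name}\n"
        f"Material: {product.material}\n"
        f"Color: {product.color}\n"
        f"Price: ${product.price:.2f}\n"
        f"Features:\n{feature_lines}\n"
    )
```

The returned string is sent to the self-hosted model like any other prompt. Telling the model to use only the listed facts is a simple guard against invented specifications, though outputs should still be spot-checked.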

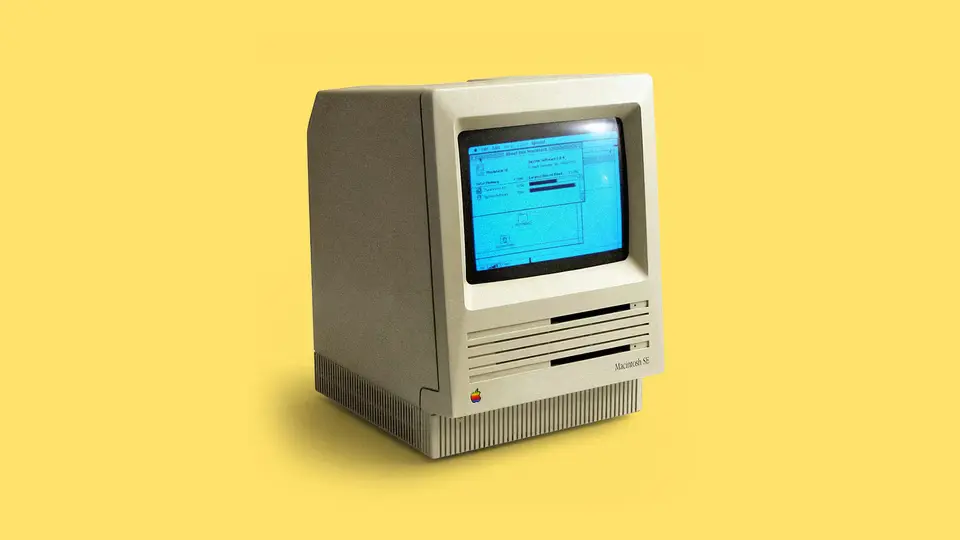

Self-Hosting Can Start Small—Even on Older Hardware

You don’t need a data center to run a useful LLM. Modern open models like Mistral 7B or TinyLlama, especially in quantized form (weights stored at 8 or 4 bits instead of 16), can run efficiently on:

- A single GPU workstation

- Refurbished servers with decent RAM

- Or even a cloud virtual machine with a rented GPU
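Why these models fit on modest hardware comes down to simple arithmetic: the memory needed just to hold the weights is roughly the parameter count times the bytes stored per parameter. The sketch below assumes approximate parameter counts (about 7.3 billion for Mistral 7B and 1.1 billion for TinyLlama) and ignores the extra memory for the KV cache, activations, and runtime overhead, so treat its results as lower bounds.

```python
# Rough lower bound on the memory needed to hold model weights.
# Assumption: parameter counts are approximate; KV cache, activations and
# framework overhead are NOT included, so real usage is higher.

APPROX_PARAMS = {
    "Mistral 7B": 7.3e9,
    "TinyLlama": 1.1e9,
}


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Weights-only memory in decimal gigabytes: params * bits / 8 / 1e9."""
    return num_params * bits_per_param / 8 / 1e9


def print_memory_table() -> None:
    """Print weights-only estimates at 16-bit, 8-bit and 4-bit precision."""
    for name, params in APPROX_PARAMS.items():
        estimates = ", ".join(
            f"{bits}-bit: {weight_memory_gb(params, bits):.1f} GB" for bits in (16, 8, 4)
        )
        print(f"{name}: {estimates}")


print_memory_table()
```

Under these assumptions, Mistral 7B needs about 14.6 GB for its weights at 16-bit precision but under 4 GB at 4-bit, which is why quantized versions can fit on a single workstation GPU; TinyLlama needs about 2.2 GB even at 16-bit.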

Yes, cloud-based LLMs are becoming cheaper to use, but with self-hosting you control your costs: no unpredictable API bills, no network round trip to an outside provider, and no vendor lock-in.

You can even self-host in the cloud by renting GPU capacity, allowing flexible scaling without upfront hardware investments.
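Controlling costs starts with a back-of-the-envelope comparison between per-token API pricing and a fixed GPU rental. The sketch below computes the monthly token volume above which a rented GPU becomes the cheaper option. All prices in it are hypothetical placeholders, not quotes from any provider, and it ignores staff time, storage, and whether one GPU can actually serve that volume.

```python
HOURS_PER_MONTH = 24 * 30  # simplification: a 30-day month, GPU always on


def monthly_gpu_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Fixed cost of keeping one rented GPU running for a month."""
    return hourly_rate * hours


def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """Usage-based cost of sending the same traffic to a hosted API."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_tokens(fixed_monthly_cost: float, price_per_million_tokens: float) -> float:
    """Monthly token volume at which API spend equals the fixed GPU cost."""
    return fixed_monthly_cost / price_per_million_tokens * 1_000_000


# Hypothetical placeholder prices, for illustration only.
gpu = monthly_gpu_cost(hourly_rate=1.00)  # 720.0 per month
threshold = break_even_tokens(gpu, price_per_million_tokens=2.00)
print(f"GPU: {gpu:.2f}/month; break-even at {threshold / 1e6:.0f}M tokens/month")
# prints "GPU: 720.00/month; break-even at 360M tokens/month"
```

Below the threshold, paying per token is cheaper; above it, the fixed GPU wins and the bill stops growing with usage. Swap in your own quotes before drawing conclusions.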

Understand the Technology—Empower Your Business

One of the most overlooked benefits of running your own LLM is the learning curve. When you host and fine-tune your own model, your team starts to understand:

- How prompts really work under the hood

- How model size, latency, and memory tradeoffs affect performance

- How to build smarter, safer workflows with AI at the center

That insight pays dividends—whether you continue self-hosting or start integrating third-party AI solutions. You’ll be in a stronger position to evaluate vendors, customize features, and build responsibly.
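One hands-on way to explore the latency tradeoffs mentioned above is to time the same prompt against different model sizes or quantization levels. The sketch below measures wall-clock time and tokens per second for any `generate` function you supply; that function's signature (prompt in, list of tokens out) and the `GenerationStats` and `measure_generation` names are assumptions, so adapt it to whatever client your deployment uses.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class GenerationStats:
    num_tokens: int
    seconds: float
    tokens_per_second: float


def measure_generation(
    generate: Callable[[str], List[str]],
    prompt: str,
    clock: Callable[[], float] = time.perf_counter,
) -> GenerationStats:
    """Time one call to `generate` and report throughput."""
    start = clock()
    tokens = generate(prompt)
    elapsed = clock() - start
    # Guard against a zero-length interval on very fast stubs.
    rate = len(tokens) / elapsed if elapsed > 0 else float("inf")
    return GenerationStats(len(tokens), elapsed, rate)


# Stand-in generator so the sketch runs without a model; replace with a real client.
def fake_generate(prompt: str) -> List[str]:
    return prompt.split()


print(measure_generation(fake_generate, "summarize yesterday's store sales"))
```

Running the same measurement against, say, a 16-bit and a 4-bit build of one model gives your team first-hand numbers on the speed versus memory tradeoff. The `clock` parameter exists so the timing can be replaced with a fake clock in tests.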

So, Should Every Retailer Self-Host an LLM?

Not for everything. But yes—for the right use cases.

Think of self-hosted LLMs as part of a broader retail AI toolkit. Use them to:

- Power internal tools with fast, secure, cost-effective intelligence

- Reduce the burden on support and operations teams

- Test and explore new ideas in a safe environment

And most importantly: use them to build AI literacy and ownership within your company, rather than relying entirely on external services.

Final Thoughts

The future of AI in retail isn’t one-size-fits-all. It’s hybrid.

Public cloud models will continue to dominate general-purpose use—but self-hosted LLMs give you the control, privacy, and understanding that retail organizations need to build competitive, responsible AI systems.

Whether you’re just starting or already experimenting with AI, now is the time to explore what’s possible when you bring LLMs in-house.

Need help getting started with self-hosted AI?

QBCS helps retailers deploy self-hosted LLMs—on-prem, in the cloud, or in hybrid environments. We’ll help you choose the right model, train it on your data, and integrate it safely into your workflows.